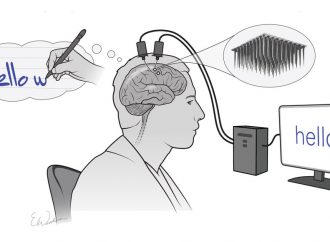

The Brown University researchers used a brain-computer interface to reconstruct neural signals into English words.

Source: Interesting Engineering

People with hearing loss may someday be able to hear clearly thanks to work from researchers at Brown University.

They were able to take neural signals from the brains of rhesus macaques, a species of Old World monkey, and translate them into English words using a brain-computer interface.

The work could pave the way for brain implants to help the hearing impaired.

Non-human primates process sounds the same as humans

“The overarching goal is to better understand how sound is processed in the primate brain,” said Arto Nurmikko, a professor in Brown’s School of Engineering, a research associate in Brown’s Carney Institute for Brain Science and senior author of the study, in a press release highlighting the work. “Which could ultimately lead to new types of neural prosthetics.”

According to the researchers, the brain systems of humans and non-human primates work the same way during the initial processing phase. That phase occurs in the auditory cortex, which sorts sounds by attributes such as pitch or tone. The sounds are then processed in the secondary auditory cortex, where they are distinguished as words.

That information is then sent to other parts of the brain for further processing and then speech. While non-human primates aren’t likely to understand what words mean, the researchers wanted to learn how they process them.

To do that, they recorded the activity of neurons in the rhesus macaques while the animals listened to recordings of individual English words and macaque calls.

The recordings mark a first

The researchers relied on two pea-sized implants with 96-channel microelectrode arrays to get the recordings.

The rhesus macaques heard one- and two-syllable words including tree, good, north, cricket, and program. It was the first time scientists were able to record such complex auditory information, which the multielectrode arrays made possible.

“Previously, work had gathered data from the secondary auditory cortex with single electrodes, but as far as we know this is the first multielectrode recording from this part of the brain,” Nurmikko said. “Essentially we have nearly 200 microscopic listening posts that can give us the richness and higher resolution of data which is required.”

RNNs outperformed traditional algorithms

Part of the study focused on determining which decoding algorithm performed best. Jihun Lee, a doctoral student, working in collaboration with Wilson Truccolo, a computational neuroscience expert, found that recurrent neural networks, or RNNs, produced the highest-fidelity reconstructions.

The RNNs “substantially” outperformed traditional algorithms that had been effective in decoding neural data from other areas of the brain. The goal is to someday develop neural implants that could help restore people’s hearing.

“The aspirational scenario is that we develop systems that bypass much of the auditory apparatus and go directly into the brain,” Nurmikko said.

“The same microelectrodes we used to record neural activity in this study may one day be used to deliver small amounts of electrical current in patterns that give people the perception of having heard specific sounds.” Their work was published in the journal Nature Communications Biology.
