Autonomous vehicles have long been seen as a major security issue, but experts say they’re less vulnerable to hacks than human-controlled vehicles

Self-driving cars feel like they should provide a nice juicy target for hackers.

After all, a normal car has a driver with their hands on the wheel and feet on the pedals. Common sense suggests this provides a modicum of protection against a car takeover which a self-driving car, or even one with just the sort of assisted driving features already found on the road today, lacks.

But that’s the wrong way round, says Craig Smith, a security researcher and car hacker. “One interesting thing about fully self-driving cars is they’re unintentionally more secure, which is really not what you would expect at all.”

Alongside his day job as the head of transportation research at security firm Rapid7, Smith runs the Car Hacking Village at Defcon, the world’s largest hacking convention, in Las Vegas.

Spend any time there, and you’ll start giving sideways glances to anything weird your car does. Now in its third year, the village is the nexus of a community capable of remotely hacking into a Jeep to cut the brakes and fooling a Tesla’s autopilot into thinking there’s a phantom pillar in front of it.

At first automotive manufacturers saw hackers the way much of the world still does: irritations at best, and hardened criminals at worst, maliciously trying to break their products and endanger the world.

These days attitudes have softened, with hackers seen as potential allies, or at least uneasy partners, in the war against cybercrime. The bugs that let hackers into a car are there from the start; it’s better if someone like Smith finds them rather than an unscrupulous gangster who wants to start experimenting with in-car ransomware.

The most obvious example of the thawing of attitudes was a Mazda 2 rolled in by the Japanese carmaker for the denizens of Defcon to have their way with. But it’s only halfway there, Smith warns: “I like that Mazda’s here, but everybody has a lot of work to do in this particular field, in terms of being more open about their stuff.”

Mazdas are good cars to learn from, he explains, because all the electronic systems send instructions around the car through one bottleneck, the high-speed bus. In most cars, the bus is reserved for the most safety critical messages, such as steering or braking, meaning it’s not really the sort of thing you want to mess around with. But you can learn how the bus works by fiddling with something as mundane as the windscreen wiper commands on a Mazda.

Those sorts of considerations still form the bread and butter of car hacking research right now. The inner workings of most cars are obfuscated, complex and locked up, leaving researchers struggling to even understand what an in-car computer looks like when it’s working, let alone how to start pushing the boundaries of what it can do.

Obscurity might bring safety for a while, but it also renders security research expensive and time-consuming. The really dangerous flaws are likely to be discovered, not by hobbyist researchers, but by those who stand to make money from hacking.

One of the biggest stories from the sector in 2015 and 2016 was the hacking of a Jeep. In 2015, two researchers from IOActive discovered a way to hack their way from the internet-connected entertainment system of the car into the low-level system which controls the vehicle.

That let them send commands to brake or steer the car wirelessly, prompting a mass recall of affected models. In 2016 the pair presented an update at Defcon that illustrated just how much more they could have done after slowly reverse engineering the Jeep’s low-level system.

They went from only being able to control the car when it was travelling less than 5 miles per hour, for instance, to being able to control the speed of the car at will.

That distinction is also why Smith is counterintuitively optimistic about the future of cars, as they move from human- to computer-controlled. Smith explains:

“The way cars work today is you have a few sensors. You can see how they work in a lot of commercials: a car is backing up, or parking, it sees a kid in a driveway, and it stops.

“You have one signal coming in, saying ‘I see an object, stop the car’. It’s a computer overriding a human. The human is saying ‘I’m going to drive, I’m giving it gas’, and not only is it ignoring it, the car is doing the opposite of what the human says. ‘That’s nice, I’m going to stop’.”

Those signals are sent through that same low-level system that hackers have been penetrating for years. It is called the CAN bus, short for Controller Area Network. It’s generally the case that once a hacker has access to it, the rest of the game is largely reverse-engineering what signals are sent when. As one security engineer for a major car firm joked, “it’s called the can bus, not the can’t bus, because once you’ve got access to it, there’s nothing you can’t do”.
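To see why access to the bus is so powerful, consider a minimal Python sketch. It is a simplification, not any real vehicle's protocol. A classic CAN frame carries an identifier and up to eight data bytes, and has no field naming the sender, so a receiver cannot tell a genuine frame from a forged one. The identifier `0x123`, the meaning of the payload, and the names `CanFrame` and `brake_controller` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical identifier for a "stop the car" message; real IDs vary by manufacturer.
BRAKE_REQUEST_ID = 0x123


@dataclass(frozen=True)
class CanFrame:
    """Simplified classic CAN frame: an identifier and up to 8 data bytes.

    There is deliberately no sender field, which is the point of this sketch.
    """
    arbitration_id: int
    data: bytes

    def __post_init__(self):
        if not 0 <= self.arbitration_id <= 0x7FF:
            raise ValueError("standard CAN identifiers are 11 bits")
        if len(self.data) > 8:
            raise ValueError("classic CAN frames carry at most 8 data bytes")


def brake_controller(frame: CanFrame) -> str:
    """Hypothetical receiver that acts on any frame with the right identifier."""
    if frame.arbitration_id == BRAKE_REQUEST_ID and frame.data[:1] == b"\x01":
        return "brake"
    return "ignore"


genuine = CanFrame(BRAKE_REQUEST_ID, b"\x01")  # imagined as sent by the parking sensor
forged = CanFrame(BRAKE_REQUEST_ID, b"\x01")   # imagined as sent by an attacker on the bus

print(brake_controller(genuine))  # brake
print(brake_controller(forged))   # brake
print(genuine == forged)          # True: the two frames are indistinguishable
```

In this toy model the only thing an attacker needs to learn is which identifier and payload mean "brake", which is the reverse-engineering work described above.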

Smith explains that from a hacker’s point of view having just one sensor makes it much easier to fake a signal or event to fool the car into doing something. But self-driving cars are, by and large, smarter. Smith said: “In a self-driving world, fully self-driving, they have to use lots of different sensors.”

That’s because simple proximity-detection doesn’t cut it if you need a car to drive in different conditions and through different streets without human intervention. How, for example, do you tell the difference between a pile of leaves lying in the road – safe to drive through – and a child who has fallen off their bike? No single-reading depth detector can tell you that.

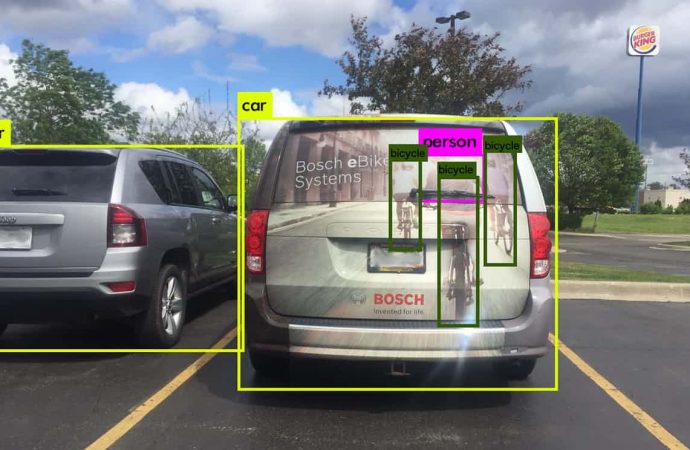

“Each of the sensors used in autonomous driving comes to solve another part of the sensing challenge,” said Danny Atsmon, the head of self-driving vehicle testing company Cognata, who explained that cameras on autonomous cars have been confused by an RV with an image of a landscape on it or by a car with a picture of a bike.

“Lidar can not sense glass, radar senses mainly metal and the camera can be fooled by images, hence, the industry decided on a sensor redundancy and sensor fusion approach to solve that, but each of the edge cases that we present here raises the bar,” said Atsmon.

That fusion of sensors doesn’t just help get a better picture of the world; it also, accidentally, solves part of the security problem.

“The interesting thing that happens is that each sensor doesn’t trust the other,” Smith says. The radar no longer has the ability to bring the car to a juddering halt, for instance, because the camera and Lidar might overrule its findings. To convincingly fake all the systems at once, you can’t just feed in a few false signals: you have to model an entirely fictitious world.

“It’s way closer to the way humans figure out whether something is an illusion or not. And that’s harder for a hacker to deal with. Even the most secure corporate networks tend not to take that sort of approach: once you’re in the secure zone, they assume you’re one of the good guys.”
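The cross-checking Smith describes can be illustrated with a small Python sketch, assuming a simple majority vote among three sensors. Real sensor fusion is far more elaborate; the function names and the voting rule here are illustrative assumptions, not how any particular manufacturer does it.

```python
from typing import Mapping


def single_sensor_stop(radar_sees_obstacle: bool) -> bool:
    """Driver-assist style: one signal is enough to stop the car."""
    return radar_sees_obstacle


def fused_obstacle(readings: Mapping[str, bool]) -> bool:
    """Assumed rule: report an obstacle only if a strict majority of sensors agree."""
    votes = sum(readings.values())
    return votes * 2 > len(readings)


# An attacker spoofs only the radar signal.
spoofed = {"radar": True, "camera": False, "lidar": False}

print(single_sensor_stop(spoofed["radar"]))  # True: the single-sensor car stops
print(fused_obstacle(spoofed))               # False: the other sensors overrule the radar

# To force a stop, the attacker has to fake most of the sensors consistently.
print(fused_obstacle({"radar": True, "camera": True, "lidar": False}))  # True
```

Under this assumed rule, one forged signal changes nothing, and the attacker's job grows with every independent sensor that has to be fooled in a mutually consistent way.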

The future won’t be a hack-free heaven. Software is complex, mistakes happen, and there are other ways to sneak through the code of self-driving cars – even if that entails hacking, not the car, but the world itself. Take the researchers who printed fake Stop signs that are readable by a human but not a car.

But it looks like sitting in a car controlled by a computer may at least be safer than sitting in a car that thinks it’s controlled by you.

That’s certainly true in my case. I can’t drive. The sooner this stuff gets solved, the better.

Source: The Guardian
