As robots start to enter public spaces and work alongside humans, the need for safety measures has become more pressing, argue academics

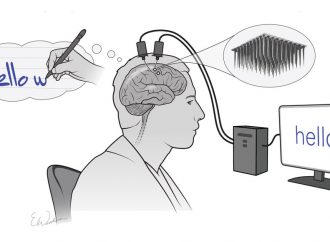

Robots should be fitted with an “ethical black box” to keep track of their decisions and enable them to explain their actions when accidents happen, researchers say.

The need for such a safety measure has become more pressing as robots have spread beyond the controlled environments of industrial production lines to work alongside humans as driverless cars, security guards, carers and customer assistants, they claim.

Scientists will make the case for the devices at a conference at the University of Surrey on Thursday where experts will discuss progress towards autonomous robots that can operate without human control. The proposal comes days after a K5 security robot named Steve fell down steps and plunged into a fountain while on patrol at a riverside complex in Georgetown, Washington DC. No one was hurt in the incident.

“Accidents, we hope, will be rare, but they are inevitable,” said Alan Winfield, professor of robot ethics at the University of the West of England in Bristol. “Anywhere robots and humans mix is going to be a potential situation for accidents.”

In May last year, a man was killed when his Tesla Model S was involved in the world’s first fatal self-driving car crash. Behind the wheel, Joshua Brown had handed control to the vehicle’s Autopilot feature. Neither he nor the car’s sensors detected a truck that drove across his path, resulting in the fatal collision.

An investigation by the US National Highway Traffic Safety Administration blamed the driver, but the incident led to criticisms that Elon Musk’s Tesla company was effectively testing critical safety technology on its customers. Andrew Ng, a prominent artificial intelligence researcher at Stanford University, said it was “irresponsible” to ship a driving system that lulled people into a “false sense of safety”.

Winfield and Marina Jirotka, professor of human-centred computing at Oxford University, argue that robotics firms should follow the example set by the aviation industry, which brought in black boxes and cockpit voice recorders so that accident investigators could understand what caused planes to crash and ensure that crucial safety lessons were learned. Installed in a robot, an ethical black box would record the robot’s decisions, its basis for making them, its movements, and information from sensors such as cameras, microphones and rangefinders.

“Serious accidents will need investigating, but what do you do if an accident investigator turns up and discovers there is no internal datalog, no record of what the robot was doing at the time of the accident? It’ll be more or less impossible to tell what happened,” Winfield said.

“The reason commercial aircraft are so safe is not just good design, it is also the tough safety certification processes and, when things do go wrong, robust and publicly visible processes of air accident investigation,” the researchers write in a paper to be presented at the Surrey meeting.

The introduction of ethical black boxes would have benefits beyond accident investigation. The same devices could provide robots – elderly care assistants, for example – with the ability to explain their actions in simple language, and so help users to feel comfortable with the technology.

The unplanned dip by the K5 robot in Georgetown on Monday is only the latest incident to befall the model. Last year, one of the five-foot-tall robots knocked over a one-year-old boy at Stanford Shopping Center and ran over his foot. The robot’s manufacturers, Knightscope, have since upgraded the machine. More recently it emerged that at least some humans might be fighting back. In April, police in Mountain View, home of Google HQ, arrested an allegedly drunken man after he pushed over a K5 robot as it trundled around a car park. One resident reportedly described the assailant as “spineless” for taking on an armless machine.

Source: The Guardian
