Betelgeuse, the bright star in the constellation of Orion, has fascinated astronomers in recent months because of its unusually steep decline in brightness. Scientists have discussed a number of scenarios to explain its behavior. Now a team led by Thavisha Dharmawardena of the Max Planck Institute for Astronomy has shown that unusually large star spots on Betelgeuse's surface most likely caused the dimming. Their results rule out the earlier conjecture that dust recently ejected by Betelgeuse obscured the star.

Source: Phys.org

Red giant stars like Betelgeuse undergo frequent brightness variations. However, the striking drop in Betelgeuse's luminosity to about 40% of its normal value between October 2019 and April 2020 came as a surprise to astronomers. Scientists developed various scenarios to explain this change in the brightness of the star, which is visible to the naked eye and lies almost 500 light years away. Some astronomers even speculated about an imminent supernova. An international team of astronomers led by Thavisha Dharmawardena from the Max Planck Institute for Astronomy in Heidelberg has now demonstrated that temperature variations in the photosphere, i.e. the luminous surface of the star, caused the brightness to drop. The most plausible source of such temperature changes is gigantic cool star spots, similar to sunspots but covering 50 to 70% of the star's surface.

“Towards the end of their lives, stars become red giants,” Dharmawardena explains. “As their fuel supply runs out, the processes by which the stars release energy change.” As a result, they bloat, become unstable and pulsate with periods of hundreds or even thousands of days, which we see as fluctuations in brightness. Betelgeuse is a so-called red supergiant, a star that is about 20 times more massive than our Sun and roughly 1000 times larger in radius. If placed at the center of the solar system, it would almost reach the orbit of Jupiter.
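As a quick sanity check on the Jupiter comparison, the sketch below converts 1000 solar radii into astronomical units. The constants are standard values supplied here rather than taken from the article, and `radius_in_au` is a hypothetical helper for illustration only.

```python
# Rough check of the "almost reaches Jupiter's orbit" comparison.
# Assumed constants (not from the article):
SOLAR_RADIUS_M = 6.957e8       # IAU nominal solar radius, metres
AU_M = 1.495978707e11          # astronomical unit, metres
JUPITER_ORBIT_AU = 5.2         # approximate semi-major axis of Jupiter's orbit, AU

def radius_in_au(radius_in_solar_radii: float) -> float:
    """Convert a stellar radius given in solar radii to astronomical units."""
    return radius_in_solar_radii * SOLAR_RADIUS_M / AU_M

betelgeuse_au = radius_in_au(1000)  # "roughly 1000 times larger" than the Sun
print(f"Betelgeuse radius ~ {betelgeuse_au:.2f} AU vs Jupiter's orbit at ~{JUPITER_ORBIT_AU} AU")
```

The result, about 4.65 AU, falls just short of Jupiter's orbit at roughly 5.2 AU, which matches the article's "almost".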

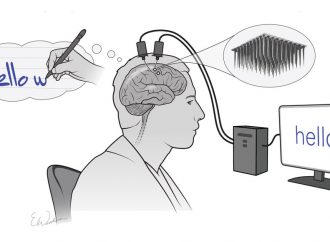

Because of its size, the gravitational pull on the surface of the star is less than on a star of the same mass but with a smaller radius. Therefore, pulsations can eject the outer layers of such a star relatively easily. The released gas cools down and develops into compounds that astronomers call dust. This is why red giant stars are an important source of heavy elements in the Universe, from which planets and living organisms eventually evolve. Astronomers have previously considered the production of light absorbing dust as the most likely cause of the steep decline in brightness. Light and dark: These high-resolution images of Betelgeuse show the distribution of brightness in visible light on its surface before and during its darkening. Due to the asymmetry, the authors conclude that there are huge stars pots. The images were taken by the SPHERE camera of the European Southern Observatory (ESO). Credit: ESO / M. Montargès et al.
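The weaker surface gravity follows from Newton's law, g = GM/R²: at fixed mass, g falls with the square of the radius. Below is a minimal sketch in solar units using the article's round figures of 20 solar masses and 1000 solar radii; the solar surface gravity of about 274 m/s² and the comparison star of 10 solar radii are assumptions added for illustration.

```python
# Surface gravity g = G*M/R**2. In solar units, G and the solar values cancel,
# so g/g_sun = (M/M_sun) / (R/R_sun)**2.
SUN_SURFACE_G = 274.0  # approximate solar surface gravity, m/s^2 (assumed value)

def surface_gravity_ratio(mass_solar: float, radius_solar: float) -> float:
    """Surface gravity relative to the Sun's, for mass and radius in solar units."""
    return mass_solar / radius_solar**2

betelgeuse = surface_gravity_ratio(20, 1000)  # article's round figures
compact = surface_gravity_ratio(20, 10)       # hypothetical star: same mass, 10 solar radii

print(f"Betelgeuse: g/g_sun = {betelgeuse:.1e} (about {betelgeuse * SUN_SURFACE_G:.4f} m/s^2)")
print(f"Same mass at 10 solar radii: {compact / betelgeuse:.0f} times stronger surface gravity")
```

With these inputs, Betelgeuse's surface gravity comes out at about one fifty-thousandth of the Sun's, and a star of the same mass but a hundred times smaller radius would have ten thousand times stronger surface gravity.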

To test this hypothesis, Thavisha Dharmawardena and her collaborators evaluated new and archival data from the Atacama Pathfinder Experiment (APEX) and the James Clerk Maxwell Telescope (JCMT). These telescopes measure radiation in the submillimeter range (terahertz radiation), whose wavelength is about a thousand times longer than that of visible light. This radiation is invisible to the eye, but astronomers have long used it to study interstellar dust, since cool dust in particular glows at these wavelengths.
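To put "a thousand times longer" in concrete terms, the sketch below scales an assumed representative visible wavelength of 550 nm (not a figure from the article) by 1000 and converts it to a frequency with f = c/λ.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

visible_wavelength_m = 550e-9                      # assumed green-light wavelength
submm_wavelength_m = 1000 * visible_wavelength_m   # "a thousand times longer"
frequency_hz = C / submm_wavelength_m              # f = c / wavelength

print(f"wavelength {submm_wavelength_m * 1e3:.2f} mm, frequency {frequency_hz / 1e12:.2f} THz")
```

The result, about half a millimeter and roughly half a terahertz, shows why this band is described both as submillimeter and as terahertz radiation.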

“What surprised us was that Betelgeuse turned 20% darker even in the submillimeter range,” reports Steve Mairs from the East Asian Observatory, who collaborated on the study. Such behavior is not what one would expect from dust: because cool dust glows at these wavelengths, freshly formed dust would be expected to add to the submillimeter emission rather than reduce it. For a more precise evaluation, Dharmawardena and her collaborators calculated how dust would affect measurements in this spectral range. The calculations confirmed that the dimming in the submillimeter range cannot be attributed to increased dust production. Instead, the star itself must have caused the change in brightness that the astronomers measured.

According to the laws of physics, the luminosity of a star depends on its diameter and especially on its surface temperature: it scales with the square of the radius but with the fourth power of the temperature. If only the size of the star decreases, the brightness falls by the same fraction at all wavelengths. Temperature changes, however, affect the radiation emitted across the electromagnetic spectrum differently. According to the scientists, the darkening measured in both visible light and submillimeter waves is therefore evidence of a drop in Betelgeuse's mean surface temperature, which they estimate at about 200 K (equivalently, a drop of 200 °C).
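The contrast between a change in size and a change in temperature can be illustrated with the Planck law for an idealized blackbody, whose observed flux scales as R² B_λ(T). In this sketch the starting temperature of 3600 K, the 10% radius reduction and the 850 μm comparison wavelength are illustrative assumptions, not values from the study, and a real supergiant atmosphere is far from a single-temperature blackbody.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 299_792_458.0    # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K

def planck(wavelength_m: float, temperature_k: float) -> float:
    """Blackbody spectral radiance B_lambda(T), in W sr^-1 m^-3."""
    x = H * C / (wavelength_m * K_B * temperature_k)
    return 2 * H * C**2 / wavelength_m**5 / math.expm1(x)  # expm1 stays accurate for small x

def flux_ratio(wavelength_m, t_before, t_after, r_before=1.0, r_after=1.0):
    """Flux after/before for a uniform spherical blackbody.

    The radius factor (R_after/R_before)**2 is the same at every wavelength;
    the temperature factor B_lambda(T_after)/B_lambda(T_before) is not.
    """
    size_factor = (r_after / r_before) ** 2
    return size_factor * planck(wavelength_m, t_after) / planck(wavelength_m, t_before)

T0 = 3600.0  # assumed pre-dimming temperature, K (illustrative only)
for label, lam in [("visible, 550 nm", 550e-9), ("submm, 850 um", 850e-6)]:
    cooler = flux_ratio(lam, T0, T0 - 200)          # 200 K cooler, same size
    smaller = flux_ratio(lam, T0, T0, r_after=0.9)  # same temperature, 10% smaller radius
    print(f"{label}: cooler -> {cooler:.2f}, smaller -> {smaller:.2f}")
```

With these inputs, cooling by 200 K cuts the 550 nm flux by roughly a third but the 850 μm flux by only about 6%, while shrinking the radius by 10% lowers both by the same 19%. The idealized numbers are not meant to reproduce the measured dips; they only show why comparing visible and submillimeter dimming carries information about temperature.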

“However, an asymmetric temperature distribution is more likely,” explains co-author Peter Scicluna from the European Southern Observatory (ESO). “Corresponding high-resolution images of Betelgeuse from December 2019 show areas of varying brightness. Together with our result, this is a clear indication of huge star spots covering between 50 and 70% of the visible surface and having a lower temperature than the brighter photosphere.” Star spots are common in giant stars, but not on this scale. Little is known about their lifetimes. However, theoretical model calculations of spot lifetimes appear compatible with the duration of Betelgeuse's dip in brightness.
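One way to see how cool spots lower the mean temperature is a two-component surface: a fraction f is covered by spots at T_spot and the rest by photosphere at T_phot, combined through the Stefan-Boltzmann law. This is a simplified model chosen for illustration, not the authors' analysis; the 3600 K photospheric temperature is an assumption, while the 200 K drop and the 50 to 70% coverage come from the text.

```python
def spot_temperature(t_phot: float, t_mean: float, filling_factor: float) -> float:
    """Spot temperature needed for a two-temperature surface to match a mean temperature.

    Assumes the flux-weighted mean obeys
        t_mean**4 = f * t_spot**4 + (1 - f) * t_phot**4
    (Stefan-Boltzmann law per unit area), where f is the fraction of the
    surface covered by spots.
    """
    t_spot4 = (t_mean**4 - (1 - filling_factor) * t_phot**4) / filling_factor
    if t_spot4 <= 0:
        raise ValueError("no physical spot temperature for these inputs")
    return t_spot4 ** 0.25

T_PHOT = 3600.0          # assumed unspotted photospheric temperature, K (illustrative)
T_MEAN = T_PHOT - 200.0  # mean temperature after the ~200 K drop reported in the text

for f in (0.5, 0.6, 0.7):
    t_spot = spot_temperature(T_PHOT, T_MEAN, f)
    print(f"spots covering {f:.0%}: T_spot ~ {t_spot:.0f} K ({T_PHOT - t_spot:.0f} K cooler)")
```

Under these assumptions, the spots would need to be roughly 300 to 450 K cooler than the surrounding photosphere, with larger coverage requiring less extreme cooling.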

We know from the Sun that the number of spots rises and falls in an 11-year cycle. Whether giant stars have a similar mechanism is uncertain. One indication could be the preceding brightness minimum, which was also much more pronounced than earlier ones. “Observations in the coming years will tell us whether the sharp decrease in Betelgeuse’s brightness is related to a spot cycle. In any case, Betelgeuse will remain an exciting object for future studies,” Dharmawardena concludes.
