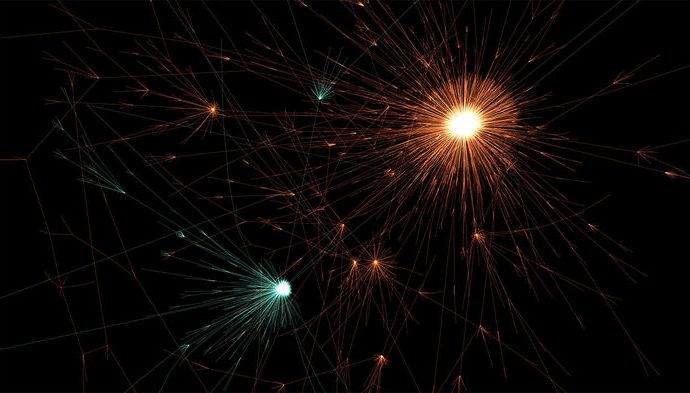

Tweets containing false news (depicted in orange in this data visualization) spread to more people through Twitter than tweets containing true news (teal).

During the 2016 U.S. presidential election, the internet was abuzz with discussion when reports surfaced that Floyd Mayweather wore a hijab to a Donald Trump rally, daring people to fight him. The concocted story started on a sports comedy website, but it quickly spread on social media—and people took it seriously.

From Russian “bots” to charges of fake news, headlines are awash in stories about dubious information going viral. You might think that bots—automated systems that can share information online—are to blame. But a new study shows that people are the prime culprits when it comes to the propagation of misinformation through social networks. And they’re good at it, too: Tweets containing falsehoods reach 1500 people on Twitter six times faster than truthful tweets, the research reveals.

Bots are so new that we don’t have a clear sense of what they’re doing and how much of an impact they’re making, says Shawn Dorius, a social scientist at Iowa State University in Ames who wasn’t involved in the research. We generally think that bots distort the types of information that reach the public, but, in this study at least, they don’t seem to be skewing the headlines toward false news, he notes. They propagated true and false news roughly equally.

The main impetus for the new research was the 2013 Boston Marathon bombing. Lead author Soroush Vosoughi, a data scientist at the Massachusetts Institute of Technology in Cambridge, says that after the attack, much of what he was reading on social media was false. There were rumors that a Brown University student who had gone missing was a police suspect. But people later found out that he had nothing to do with the attack and had committed suicide (for reasons unrelated to the bombing).

That’s when Vosoughi realized that “these rumors aren’t just fun things on Twitter, they really can have effects on people’s lives and hurt them really badly.” A Ph.D. student at the time, he switched his research to focus on the problem of detecting and characterizing the spread of misinformation on social media.

He and his colleagues collected 12 years of data from Twitter, starting from the social media platform’s inception in 2006. Then they pulled out tweets related to news that had been investigated by six independent fact-checking organizations—websites like PolitiFact, Snopes, and FactCheck.org. They ended up with a data set of 126,000 news items that were shared 4.5 million times by 3 million people, which they then used to compare the spread of news that had been verified as true with the spread of stories shown to be false. They found that whereas the truth rarely reached more than 1000 Twitter users, the most pernicious false news stories—like the Mayweather tale—routinely reached well over 10,000 people. False news propagated faster and wider for all forms of news—but the problem was particularly evident for political news, the team reports today in Science.

At first the researchers thought that bots might be responsible, so they used sophisticated bot-detection technology to remove social media shares generated by bots. But the results didn’t change: False news still spread at roughly the same rate and to the same number of people. By process of elimination, that meant human beings were responsible for the virality of false news.

That got the scientists thinking about the people involved. It occurred to them that Twitter users who spread false news might have more followers. But that turned out to be a dead end: Those people had fewer followers, not more.

Finally, the team decided to look more closely at the tweets themselves. As it turned out, tweets containing false information were more novel than those containing true information: they contained information that a Twitter user hadn’t seen before. They also elicited different emotional reactions, with people expressing greater surprise and disgust. That novelty and emotional charge seem to be what’s generating more retweets. “If something sounds crazy stupid you wouldn’t think it would get that much traction,” says Alex Kasprak, a fact-checking journalist at Snopes in Pasadena, California. “But those are the ones that go massively viral.”

The research gives you a sense of how much of a problem fake news is, both because of its scale and because of our own tendencies to share misinformation, says David Lazer, a computational social scientist at Northeastern University in Boston who co-wrote a policy perspective on the science of fake news that was also published today in Science. He thinks that, in the short term, the “Facebooks, Googles, and Twitters of the world” need to do more to implement safeguards that reduce the magnitude of the problem. But in the long term, we also need more science, he says: If we don’t understand where fake news comes from and how it spreads, how can we possibly combat it?

Source: Science Magazine
