Although the scientists behind it would really rather you didn’t

An aspect of artificial intelligence that’s sometimes overlooked is just how good it is at creating fake audio and video that’s difficult to distinguish from reality. The advent of Photoshop got us doubting our eyes, but what happens when we can’t rely on our other senses?

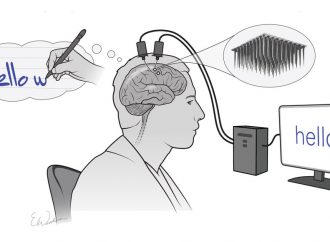

The latest example of AI’s audiovisual magic comes from the University of Washington, where researchers have created a new tool that takes audio files, converts them into realistic mouth movements, and then grafts those movements onto existing video. The end result is a video of someone saying something they didn’t. (Not at the time, anyway.) It’s a confusing process to understand by just reading about it, so take a look at the video below:

You can see two side-by-side clips of Barack Obama. The one on the left is the source for the audio, and the one on the right is from a completely different speech, with the researchers’ algorithms used to graft new mouth shapes onto the footage. The resulting video isn’t perfect (Obama’s mouth movements are a little blurry, a common problem with AI-generated imagery) but overall it’s pretty convincing.

The researchers said they used Obama as a test subject for this work because high-quality video footage of the former president is plentiful, which makes training the neural networks easier. Seventeen hours of footage were needed as data to track and replicate his mouth movements, researcher Ira Kemelmacher told The Verge over email, but in future this training constraint could be reduced to just an hour.

The team behind the work say they hope it could be used to improve video chat tools like Skype. Users could collect footage of themselves speaking, use it to train the software, and then when they need to talk to someone, video on their side would be generated automatically using just their voice. This would help in situations where someone’s internet connection is shaky, or if they’re trying to save mobile data.

Of course, there’s also the worry that tools like this can and will be used to generate misleading video footage — the sort of stuff that would give some real heft to the term “fake news.” Combine a tool like this with technology that can recreate anyone’s voice using just a few minutes of sample audio and you’d be forgiven for thinking there are scary times ahead. Similar research has been able to change someone’s facial expression in real-time; create 3D models of faces from a few photographs; and more.

The team from the University of Washington is understandably keen to distance themselves from these sorts of uses, and make it clear they only trained their neural nets on Obama’s voice and video. (“You can’t just take anyone’s voice and turn it into an Obama video,” said professor Steve Seitz in a press release. “We very consciously decided against going down the path of putting other people’s words into someone’s mouth.”) But in theory, this tech could be used to map anyone’s voice onto anyone’s face. Will everyone be so scrupulous if the technology becomes widespread?

Source: The Verge
