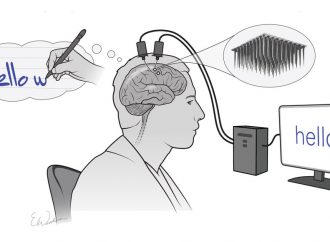

In a twist on artificial intelligence (AI), computer scientists have programmed machines to be curious—to explore their surroundings on their own and learn for the sake of learning.

The new approach could allow robots to learn even faster than they can now. Someday, they might surpass human scientists at forming hypotheses and pushing the frontiers of what’s known.

“Developing curiosity is a problem that’s core to intelligence,” says George Konidaris, a computer scientist who runs the Intelligent Robot Lab at Brown University and was not involved in the research. “It’s going to be most useful when you’re not sure what your robot is going to have to do in the future.”

Over the years, scientists have worked on algorithms for curiosity, but copying human inquisitiveness has been tricky. For example, most methods can’t assess an artificial agent’s gaps in knowledge to predict what will be interesting before the agent sees it. (Humans can sometimes judge how interesting a book will be by its cover.)

Todd Hester, a computer scientist currently at Google DeepMind in London, hoped to do better. “I was looking for ways to make computers learn more intelligently, and explore as a human would,” he says. “Don’t explore everything, and don’t explore randomly, but try to do something a little smarter.”

So Hester and Peter Stone, a computer scientist at the University of Texas at Austin, developed a new algorithm, Targeted Exploration with Variance-And-Novelty-Intrinsic-Rewards (TEXPLORE-VENIR), that relies on a technique called reinforcement learning. In reinforcement learning, a program tries something, and if the move brings it closer to some ultimate goal, such as the end of a maze, it receives a small reward and is more likely to try the maneuver again in the future. DeepMind has used reinforcement learning to allow programs to master Atari games and the board game Go largely through trial and error. But TEXPLORE-VENIR, like other curiosity algorithms, also sets an internal goal: the program rewards itself for comprehending something new, even if that knowledge doesn’t bring it closer to the ultimate goal.
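To make the reward idea concrete, here is a minimal sketch of one step of textbook tabular Q-learning in which the reward is the task (extrinsic) reward plus a weighted curiosity (intrinsic) reward. This is not TEXPLORE-VENIR’s code: the algorithm in the paper builds a model of the world and plans with it. The function name `q_update`, the learning rate `alpha`, the discount `gamma`, and the curiosity weight `beta` are all illustrative assumptions.

```python
from collections import defaultdict


def q_update(q, state, action, next_state, actions, r_ext, r_int,
             alpha=0.5, gamma=0.9, beta=1.0):
    """One Q-learning step on a combined reward (illustrative sketch).

    r_ext: extrinsic reward from the task, e.g. points for passing a door.
    r_int: intrinsic reward the agent gives itself for learning something new.
    beta:  hypothetical weight trading curiosity against the task reward.
    """
    reward = r_ext + beta * r_int
    # Value of the best action available from the next state.
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    # Nudge the current estimate toward the target; rewarded moves grow in value,
    # which makes the agent more likely to repeat them.
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


q = defaultdict(float)  # unseen (state, action) pairs start at 0
actions = ["left", "right"]
# A move that reaches a door: task points, nothing new learned.
print(q_update(q, "hall", "right", "door", actions, r_ext=10.0, r_int=0.0))  # 5.0
# A move that earns no points but reveals something new.
print(q_update(q, "hall", "left", "new_room", actions, r_ext=0.0, r_int=1.0))  # 0.5
```

Because the curiosity term is folded into the same reward signal, a move that teaches the agent something gains value even when it earns no task points, which is the behavior the paragraph above describes.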

As TEXPLORE-VENIR learns and builds a model of the world, it rewards itself for discovering information that’s unlike what it has seen before: for example, finding distant spots on a map or, in a culinary application, exotic recipes. It also rewards itself for reducing uncertainty, that is, for becoming familiar with those places and recipes. “They’re fundamentally different types of learning and exploration,” Konidaris says. “Balancing them is really important. And I like that this paper did both of those.”
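The two kinds of self-reward can be illustrated with a small sketch. It assumes states are numeric feature vectors and that the agent’s world model is an ensemble of predictors whose outputs for a given state are simply passed in. Novelty is scored here as the distance (sum of absolute differences) to the nearest state already visited, and uncertainty as the variance of the ensemble’s predictions. The function names and the weights `w_novelty` and `w_variance` are hypothetical, and this is an illustration of the idea rather than the authors’ implementation.

```python
from statistics import pvariance


def novelty_reward(state, visited):
    """Distance from `state` to the closest previously visited state.

    Far-away, never-seen states score high; revisited states score zero.
    `visited` must be non-empty (min() of an empty sequence raises ValueError).
    """
    return min(sum(abs(x - y) for x, y in zip(state, v)) for v in visited)


def variance_reward(predictions):
    """Disagreement among ensemble members predicting the same outcome.

    High variance means the model is unsure, so visiting here should
    reduce uncertainty.
    """
    return pvariance(predictions)


def intrinsic_reward(state, visited, predictions, w_novelty=1.0, w_variance=1.0):
    """Weighted sum of the two curiosity signals (hypothetical weights)."""
    return (w_novelty * novelty_reward(state, visited)
            + w_variance * variance_reward(predictions))


visited = [(0, 0), (1, 0), (1, 1)]
# A familiar spot where the models agree: nothing to gain.
print(intrinsic_reward((1, 1), visited, [2.0, 2.0, 2.0]))  # 0.0
# A distant spot where the models disagree: a large curiosity bonus.
print(intrinsic_reward((4, 3), visited, [0.0, 2.0, 4.0]))
```

Keeping the two terms separate, each with its own weight, makes the balance Konidaris mentions an explicit, tunable choice.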

Hester and Stone tested their method in two scenarios. The first was a virtual maze consisting of a circuit of four rooms connected by locked doors. The bot, just a computer program, had to find a key, pick it up, and use it to unlock a door. Each time it passed through a door it earned 10 points, and it had 3000 steps to achieve a high score. If the researchers first let the bot explore for 1000 steps guided only by TEXPLORE-VENIR, it earned about 55 door points on average during the 3000-step test phase. If the bot used other curiosity algorithms for such exploration, its score during the test phase ranged from zero to 35, except when it used one called R-Max, which also earned the bot about 55 points. In a different setup, in which the bot had to explore and pass through doors simultaneously, TEXPLORE-VENIR earned about 70 points, R-Max earned about 35, and the others earned fewer than five, the researchers report in the June issue of Artificial Intelligence.

The researchers then tried their algorithm with a physical robot, a humanoid toy called the Nao. In three separate tasks, the half-meter-tall machine earned points for hitting a cymbal, for holding pink tape on its hand in front of its eyes, or for pressing a button on its foot. For each task it had 200 steps to earn points, but first had 400 steps to explore, either randomly or using TEXPLORE-VENIR. Averaged over 13 trials with each of the two methods, Nao was better at finding the pink tape on its hand after exploring with TEXPLORE-VENIR than after exploring randomly. It pressed the button on seven of 13 trials after using TEXPLORE-VENIR but not at all after exploring randomly. And Nao hit the cymbal in one of five trials after using TEXPLORE-VENIR, but never after exploring randomly. Through semistructured experimentation with its own body and environment, the robot guided by TEXPLORE-VENIR was well prepared for the assigned tasks, much as babies “babble” with their limbs before they learn to crawl.

But curiosity can kill the bot, or at least its productivity. If the intrinsic reward for learning is too great, the bot may ignore the extrinsic reward, says Andrew Barto, a computer scientist at the University of Massachusetts in Amherst who co-wrote the standard textbook on reinforcement learning and is an unpaid adviser to a company Stone is starting. In fact, R-Max earned fewer points when it had to explore and unlock doors at the same time, because it was distracted by its own curiosity, a kind of AI ADD. On the other hand, extrinsic rewards can interfere with learning, Barto says. “If you give grades or stars, a student may work for those rather than for his or her own satisfaction.” So an outstanding challenge in training robots is to find the right balance of internal and external rewards.
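Barto’s warning can be made concrete with a toy choice between two actions. The sketch assumes a greedy agent that scores each option as extrinsic reward plus a weight times intrinsic reward; the action names, the reward numbers, and the weight `beta` are made up for illustration and do not come from the paper.

```python
def choose(options, beta):
    """Pick the action with the highest combined reward r_ext + beta * r_int.

    `options` maps an action name to its (extrinsic, intrinsic) rewards.
    """
    return max(options, key=lambda a: options[a][0] + beta * options[a][1])


options = {
    "open_known_door": (10.0, 0.0),   # task points, nothing new to learn
    "poke_at_new_wall": (0.0, 4.0),   # no points, but novel and uncertain
}
for beta in (0.5, 2.0, 5.0):
    print(beta, choose(options, beta))
# 0.5 open_known_door
# 2.0 open_known_door
# 5.0 poke_at_new_wall
```

With a small weight the agent keeps collecting door points; once the weight is large enough, the curiosity bonus outbids the task, the distraction described above. With a weight of zero the agent never explores for its own sake. Picking a value between those extremes is the balancing problem Barto describes.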

Intelligently inquisitive bots and robots could show flexible behavior when doing chores at home, designing efficient manufacturing processes, or pursuing cures for diseases. Hester says a next step would be to use deep neural networks, algorithms modeled on the brain’s architecture, to better identify novel areas to explore, which would incidentally advance his own quest: “Can we make an agent learn like a child would?”

Source: Science Mag
