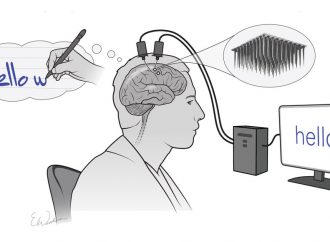

A deep learning system generates the next few frames of a story based on just one image, helping it to predict the future and understand the present.

An artificial intelligence system can predict how a scene will unfold and dream up a vision of the immediate future.

Given a still image, the deep learning algorithm generates a mini video showing what could happen next. If it starts with a picture of a train station, it might imagine the train pulling away from the platform, for example. Or an image of a beach could inspire it to animate the motion of lapping waves.

Teaching AI to anticipate the future can help it comprehend the present. To understand what someone is doing when they’re preparing a meal, we might imagine that they will next eat it, something which is tricky for an AI to grasp. Such a system could also let an AI assistant recognise when someone is about to fall, or help a self-driving car foresee an accident.

“Any robot that operates in our world needs to have some basic ability to predict the future,” says Carl Vondrick at the Massachusetts Institute of Technology, part of the team that created the new system. “For example, if you’re about to sit down, you don’t want a robot to pull the chair out from underneath you.”

Vondrick and his colleagues will present their work at a neural computing conference in Barcelona, Spain, on 5 December.

To develop their AI, the team trained it on 2 million videos from image-sharing site Flickr, featuring scenes such as beaches, golf courses, train stations and babies in hospital. These videos were unlabelled, meaning they were not tagged with information to help an AI understand them. After this training, the researchers gave the model still images and it produced its own micro-movies of what might happen next.

To teach the AI to make better videos, the team used an approach called adversarial networks. One network generates the videos, and the other judges whether they look real or fake. The two get locked in competition: the video generator tries to make videos that best fool the other network, while the other network hones its ability to distinguish the generated videos from real ones.
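The back-and-forth between the two networks can be illustrated with a toy sketch. This is not the team's video model: it assumes a "generator" that is just a line `a * z + b` turning random noise into single numbers, a "judge" that is a one-input logistic classifier, and "real" data drawn from a bell curve centred on 4. All function names, starting values and the learning rate are hypothetical choices made for illustration.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def safe_log(p):
    # Clamp to avoid log(0) if the judge becomes extremely confident.
    return math.log(max(p, 1e-12))

def generate(gen, z):
    a, b = gen
    return a * z + b                      # toy generator: noise -> sample

def judge(disc, x):
    w, c = disc
    return sigmoid(w * x + c)             # estimated probability that x is real

def disc_loss(disc, real, fake):
    # The judge wants high scores on real samples and low scores on fakes.
    on_real = -sum(safe_log(judge(disc, x)) for x in real) / len(real)
    on_fake = -sum(safe_log(1.0 - judge(disc, x)) for x in fake) / len(fake)
    return on_real + on_fake

def gen_loss(gen, disc, noise):
    # The generator wants its samples to be scored as real.
    return -sum(safe_log(judge(disc, generate(gen, z))) for z in noise) / len(noise)

def disc_step(disc, real, fake, lr):
    # One gradient-descent step on disc_loss with respect to (w, c).
    w, c = disc
    gw = gc = 0.0
    for x in real:
        err = judge(disc, x) - 1.0
        gw += err * x / len(real)
        gc += err / len(real)
    for x in fake:
        err = judge(disc, x)
        gw += err * x / len(fake)
        gc += err / len(fake)
    return (w - lr * gw, c - lr * gc)

def gen_step(gen, disc, noise, lr):
    # One gradient-descent step on gen_loss with respect to (a, b).
    a, b = gen
    w, _ = disc
    ga = gb = 0.0
    for z in noise:
        dx = (judge(disc, generate(gen, z)) - 1.0) * w
        ga += dx * z / len(noise)
        gb += dx / len(noise)
    return (a - lr * ga, b - lr * gb)

def train(steps=200, batch=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    gen, disc = (1.0, 0.0), (0.1, 0.0)    # assumed starting parameters
    for _ in range(steps):
        real = [rng.gauss(4.0, 0.5) for _ in range(batch)]   # assumed "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [generate(gen, z) for z in noise]
        disc = disc_step(disc, real, fake, lr)   # judge sharpens its eye
        gen = gen_step(gen, disc, noise, lr)     # generator tries to fool it
    return gen, disc
```

Each pass through the loop in `train` gives the judge one update, then the generator one update against the freshly updated judge. That alternation is the competition described above, shrunk from videos down to single numbers.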

At the moment, the videos are low-resolution and contain 32 frames, lasting just over 1 second. But they are generally sharp and show the right kind of movement for the scene: trains move forward in a straight trajectory while babies crumple their faces. Other attempts to predict video scenes, such as one by researchers at New York University and Facebook, have required multiple input frames and produced just a few future frames that are often blurry.

Rules of the world

The videos still seem a bit wonky to a human and the AI has lots left to learn. For instance, it doesn’t realise that a train leaving a station should also eventually leave the frame. This is because it has no prior knowledge about the rules of the world; it lacks what we would call common sense. The 2 million videos – about two years of footage – are all the data it has to go on to understand how the world works. “That’s not that much in comparison to, say, a 10-year-old child, or how much evolution has seen,” says Vondrick.
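As a rough check on those figures, the implied average clip length can be worked out directly. The sketch below assumes that "about two years" means two 365-day years of continuous footage; the function name is made up for illustration.

```python
def mean_clip_seconds(num_videos, years_of_footage):
    # Assumes 365-day years and treats the footage as one continuous run.
    seconds_per_year = 365 * 24 * 60 * 60
    return years_of_footage * seconds_per_year / num_videos

average = mean_clip_seconds(2_000_000, 2)
print(f"{average:.1f} seconds per video")
```

Under that assumption the average works out to roughly half a minute per video.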

That said, the work illustrates what can be achieved when computer vision is combined with machine learning, says John Daugman at the University of Cambridge Computer Laboratory.

He says that a key aspect is an ability to recognise that there is a causal structure to the things that happen over time. “The laws of physics and the nature of objects mean that not just anything can happen,” he says. “The authors have demonstrated that those constraints can be learned.”

Vondrick is now scaling up the system to make larger, longer videos. He says that while it may never be able to predict exactly what will happen, it could show us alternative futures. “I think we can develop systems that eventually hallucinate these reasonable, plausible futures of images and videos.”

Source: New Scientist
